Background

In my work as an Ohio school data consultant at Youngstown City Schools, I had the honor of being a member of cohort 9 of the Strategic Data Project (SDP). This two-year fellowship not only taught me the basics of educational data governance, analytics, and statistical modeling, but also gave me a much better understanding of the benefits of using data to answer questions in our school districts. As part of my graduation, I presented the results of my capstone project, titled “Teachers Or Computers – Which Are Better Predictors of Student Performance?” The answers may surprise you!

The Problems

Youngstown City School District, unfortunately, experiences quite a bit of teacher turnover every year. While this is not unique to Youngstown, it still makes it harder to identify at-risk students and provide appropriate interventions for them. Before the 2016-17 school year, Youngstown used local assessments and State screeners to identify at-risk children at the school level, but nothing was done at the district level using a common set of criteria or standards. Further, very few of the assessments students took produced results that did not depend on the teacher’s ability to interpret them.

Beginning with the 2016-17 school year, Youngstown invested in a suite of tools to address this issue. First, we implemented an Early Warning System that determines each student’s status from three measures: Attendance, Behavior, and Course Grades (known as the ABCs). This data was extracted, aggregated, and calculated weekly to give YCSD administrators an “On Track” status for each student, which was then used to target the interventions each student needed. During the 2017-18 and 2018-19 school years, we also invested heavily in a suite of computer-based benchmark assessments, including NWEA (MAP) and Istation (ISIP).
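The post does not list the thresholds behind the “On Track” flag, so the following is only a minimal sketch of how an ABC-style rule could combine the three measures into a single status. The cutoffs (`MIN_ATTENDANCE_RATE`, `MAX_BEHAVIOR_INCIDENTS`, `MIN_PASSING_GRADE`) and the `StudentABC` record are hypothetical placeholders, not YCSD’s actual criteria.

```python
from dataclasses import dataclass

# Hypothetical cutoffs for illustration only; not YCSD's actual criteria.
MIN_ATTENDANCE_RATE = 0.90   # share of enrolled days attended
MAX_BEHAVIOR_INCIDENTS = 2   # incidents allowed year-to-date
MIN_PASSING_GRADE = 60.0     # lowest course average counted as passing


@dataclass
class StudentABC:
    """Hypothetical weekly extract for one student."""
    student_id: str
    days_enrolled: int
    days_attended: int
    behavior_incidents: int
    course_grades: list[float]  # current course averages on a 0-100 scale


def on_track(s: StudentABC) -> bool:
    """Return True only if the student meets all three ABC criteria."""
    attendance_ok = (
        s.days_enrolled > 0
        and s.days_attended / s.days_enrolled >= MIN_ATTENDANCE_RATE
    )
    behavior_ok = s.behavior_incidents <= MAX_BEHAVIOR_INCIDENTS
    grades_ok = all(g >= MIN_PASSING_GRADE for g in s.course_grades)
    return attendance_ok and behavior_ok and grades_ok


students = [
    StudentABC("A", 100, 95, 0, [85.0, 72.5, 90.0]),
    StudentABC("B", 100, 82, 1, [70.0, 65.0, 88.0]),  # attendance below cutoff
    StudentABC("C", 100, 97, 4, [91.0, 58.0, 77.0]),  # too many incidents, one failing grade
]
for s in students:
    print(s.student_id, "On Track" if on_track(s) else "Off Track")
```

Requiring all three measures to pass keeps the rule easy to explain to administrators; a weekly job would simply re-run `on_track` on the refreshed extract.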

Our teachers continue to be our most important resource, and we have also been investing heavily in instructional coaching and in professional development on our instructional framework and on using and interpreting data. Some of our teachers believe they know their kids better than a computer-based assessment does, and therefore that they are the best predictors of performance. Because of the turnover mentioned earlier, teacher tenure in the district varies widely: we have some veteran teachers and a relatively large proportion of newer teachers.

My SDP capstone project set out to determine which of these best predicts student performance in grades 4-8: teacher perceptions, the ABCs, or computer-based assessments. Was the investment in computer-based assessments worthwhile? Does the accuracy of teachers’ predictions depend on their length of experience?

The Process

Early in the school year (but after enough time for teachers to get to know their students), teachers were asked to predict how their students would do on the End-Of-Year assessments. Students also took the MAP and ISIP benchmark assessments. Finally, an “ABC” calculation was run in March to determine each student’s “On/Off Track” status, similar to the weekly calculations used throughout the year. Each of these predictors was then compared with actual student performance on the State assessment, and a confusion matrix was created for each one to determine its accuracy.
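To illustrate the comparison step, here is a minimal sketch that tallies a 2x2 confusion matrix for each predictor against actual results and reports accuracy as (TP + TN) / N. It assumes every predictor has been reduced to a yes/no “predicted proficient” flag (for the ABC model, treating “On Track” as a prediction of proficiency). The function names and the student records are hypothetical toy data, not the project’s results. scikit-learn’s `sklearn.metrics.confusion_matrix` would produce the same counts in array form.

```python
from collections import Counter


def confusion_matrix(predicted: list[bool], actual: list[bool]) -> dict[str, int]:
    """Tally a 2x2 confusion matrix where True means 'proficient'."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    counts = Counter(zip(predicted, actual))
    return {
        "TP": counts[(True, True)],    # predicted proficient, was proficient
        "FP": counts[(True, False)],   # predicted proficient, was not
        "FN": counts[(False, True)],   # predicted not proficient, was proficient
        "TN": counts[(False, False)],  # predicted not proficient, was not
    }


def accuracy(cm: dict[str, int]) -> float:
    """Share of students the predictor classified correctly."""
    total = sum(cm.values())
    return (cm["TP"] + cm["TN"]) / total if total else 0.0


# Toy data for illustration only (not project results): one entry per student.
actual    = [True, False, True, True, False, False, True, False]
teacher   = [True, False, False, True, False, True, True, False]
benchmark = [True, False, True, True, True, False, True, False]
abc_track = [True, True, True, False, True, False, True, True]

for name, preds in [("Teacher", teacher), ("Benchmark", benchmark), ("ABC", abc_track)]:
    cm = confusion_matrix(preds, actual)
    print(f"{name:<10} {cm}  accuracy={accuracy(cm):.2f}")
```

Keeping the four cells, rather than accuracy alone, also shows whether a predictor errs by over-predicting proficiency (FP) or by missing students who would have passed (FN).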

Graphs

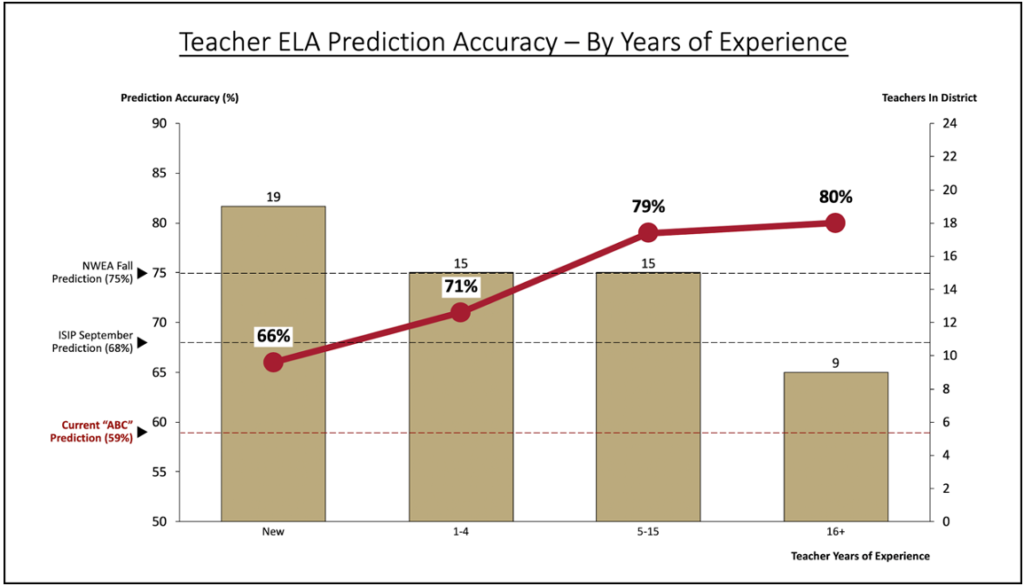

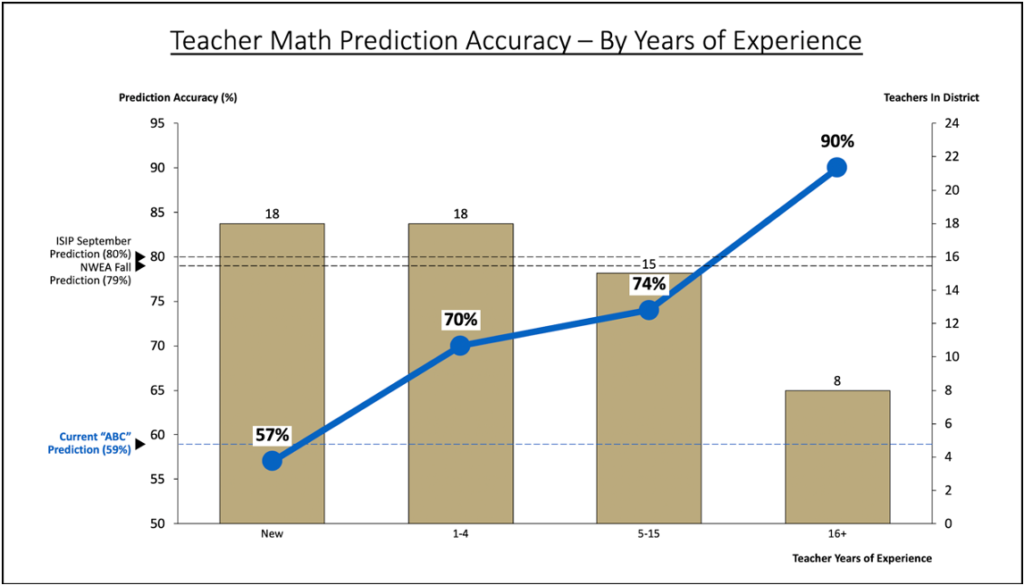

These graphs summarize my findings for ELA and math as a function of a teacher’s length of experience.

Key Findings

- The accuracy of a teacher’s predictions is related to their overall length of experience in the classroom, in both math and reading.

- Computer-based assessments outperform our new teachers and generally outperform our less experienced teachers, but do not outperform our most experienced teachers.

- Both teachers and computer-based assessments outperform our current “ABC” model in all areas except math for our new teachers.
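Answering the experience question means computing the same accuracy separately for groups of teachers. The post does not say how experience groups were defined, so the bands in `BANDS` below are hypothetical, as are the toy records; this minimal sketch only shows the grouping step.

```python
from collections import defaultdict

# Hypothetical experience bands (years in the classroom); not the project's cutoffs.
BANDS = [(0, 1, "New (0-1 yrs)"), (2, 5, "2-5 yrs"), (6, 10, "6-10 yrs"), (11, 99, "11+ yrs")]


def band_for(years: int) -> str:
    """Map a teacher's years of experience to a band label."""
    for low, high, label in BANDS:
        if low <= years <= high:
            return label
    raise ValueError(f"no band for {years} years")


def accuracy_by_band(rows: list[tuple[int, bool, bool]]) -> dict[str, float]:
    """rows: (teacher_years, predicted_proficient, actually_proficient) per student."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for years, predicted, actual in rows:
        label = band_for(years)
        total[label] += 1
        correct[label] += predicted == actual  # a bool counts as 0 or 1
    return {label: correct[label] / total[label] for label in total}


# Toy records for illustration only (not project data).
rows = [
    (0, True, False), (0, True, True), (1, False, True), (1, False, False),
    (4, True, True), (3, False, False), (5, True, False),
    (12, True, True), (15, False, False), (20, True, True),
]
for label, acc in accuracy_by_band(rows).items():
    print(f"{label:<14} accuracy={acc:.2f}")
```

Bands with no students (here, 6-10 years) are simply omitted from the result rather than reported as zero accuracy.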

Conclusions

Youngstown City School District’s investment in computer-based benchmark assessments has been extremely valuable. Because the district has a significant number of new or relatively new teachers, these assessments provide a much-needed supplement to teachers’ ability to give their students the proper instruction and support.

At the district level, our current “ABC” method for determining “On/Off Track” status is not an appropriate predictor of success on the End-Of-Year assessments in grades 4-8. While the ABC measures are important to track for students, on their own they are not sufficient to support meaningful academic recommendations. My project this summer is to build a machine-learning model that incorporates more academic factors into this prediction. We will also create separate predictive measures for our high school and K-3 students, because the data points and key metrics are very different for these populations.

This exercise has shown all of us that it is extremely important to use all of the information at our disposal when reviewing students and their needs. Teachers remain the most important part of this work, but computer-based assessments can be an extremely valuable supplement, especially for our newer teachers. While we still have a lot of work to do on student proficiency in these areas, the combination of experienced teachers and computer-based, nationally normed assessments is giving our students the best possible environment for learning.